
Hardware and Networking

- Physical Hardware

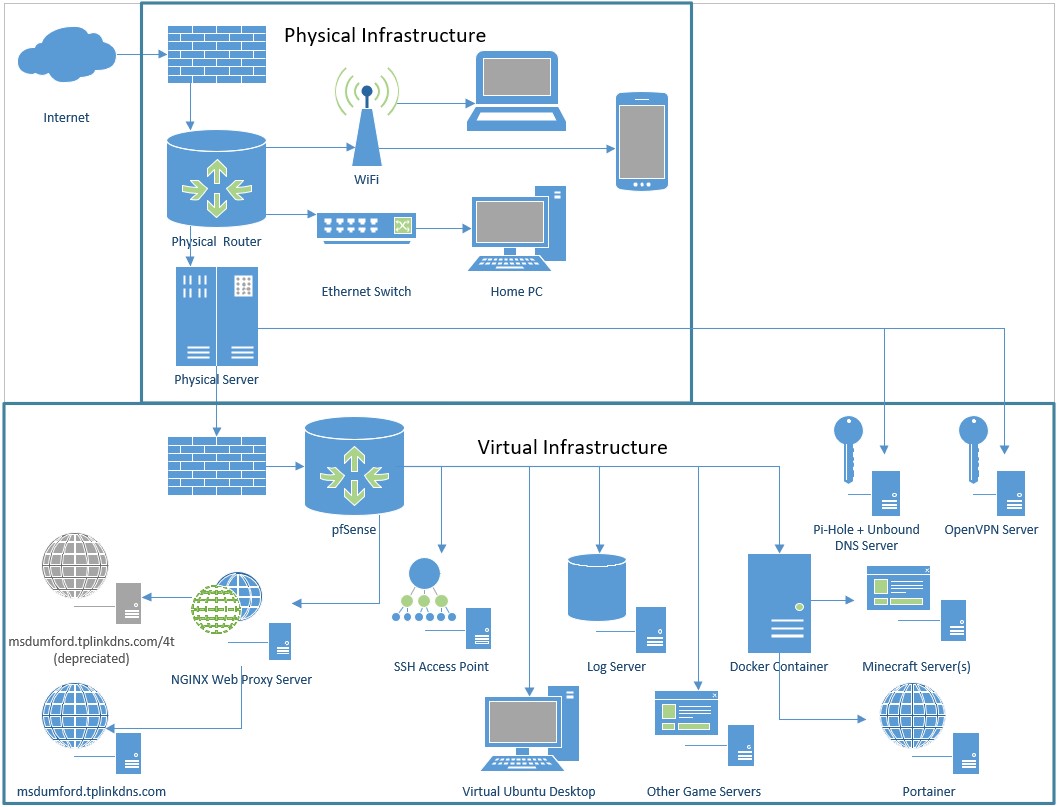

- Router: My physical router connects my home network to my ISP's modem. The router also functions as a layer 3 switch, directing traffic between my physically distinct home and server networks. I can manage its firewall and configure other settings through its local web interface.

- Server: My virtualized server infrastructure runs entirely on a desktop-style tower computer. I added more RAM, reconfigured the BIOS, and replaced the Windows 10 operating system with the open source hypervisor Proxmox VE.

- Virtualized Hardware

- Router: I wanted to have granular control over the traffic flowing in and out of my virtual network, so the first piece of virtual "hardware" is a pfSense router. It comes equipped with a full suite of firewall tools I use to monitor and protect my network. Like my physical router, pfSense hosts a local web interface that I can connect to from my home network.

- App Servers: I am currently hosting 3 externally accessible services on my virtual application servers. All of my app server VMs run headless Ubuntu Server. These services are supported by several additional VMs, also running Ubuntu Server, that provide logging, remote access, and proxy services. In total, 9 live VMs operate concurrently. All of the VMs are configured to automatically install updates and reboot during off-hours to reduce manual maintenance.

- Non-production Servers: In addition to the live VMs, I maintain a few testing and template virtual machines. One of these is an Ubuntu Desktop machine that occasionally comes in handy when I need to test something from inside my virtual network. Another is a lab server where I can test new services before deploying them to my production environment.
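The physical router's job of directing traffic between the home and server networks comes down to a layer 3 forwarding decision: find the most specific route whose network contains the destination address. The Python sketch below illustrates that longest-prefix match with the standard `ipaddress` module. The subnets and labels in `ROUTES` are hypothetical placeholders, not the actual addressing plan.

```python
import ipaddress

# Hypothetical routing table for the physical router. The subnets and labels
# are illustrative assumptions, not the real addressing plan.
ROUTES = [
    (ipaddress.ip_network("192.168.0.0/24"), "home network"),
    (ipaddress.ip_network("192.168.1.0/24"), "server network"),
    (ipaddress.ip_network("0.0.0.0/0"), "ISP modem (default route)"),
]


def next_hop(destination: str) -> str:
    """Pick the outgoing network for a destination IPv4 address."""
    addr = ipaddress.ip_address(destination)
    # Collect every route whose network contains the address.
    matches = [(net, label) for net, label in ROUTES if addr in net]
    # Longest-prefix match: the most specific network (largest prefix) wins.
    _, label = max(matches, key=lambda route: route[0].prefixlen)
    return label


print(next_hop("192.168.1.20"))  # server network
print(next_hop("93.184.216.34"))  # ISP modem (default route)
```

The default route `0.0.0.0/0` matches every IPv4 address, so anything not on a local subnet falls through to the ISP.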

Hosted Software and Applications

- Docker: Another deliverable for this project is a community Minecraft server that I host. Because I run multiple server instances simultaneously, I used Docker to containerize them all in one VM. An excellent open source project had already done the hard work of packaging the Minecraft server files into a Docker image I could use. I installed Docker Compose to define and store the startup variables, and Portainer to manage it all from yet another web interface.

- Hypervisor: I use Proxmox Virtual Environment as the hypervisor for all my virtual machines. It exposes a web interface on my home network that I can use to manage everything from another computer. Proxmox controls the system resources each VM can access and defines the virtual network architecture. It also provides tools such as snapshots and VM cloning that simplify creating and managing many virtual machines.

- Logging: One of my VMs is set up as a centralized logging server. The other VMs use Syslog to send all their logs to the central server. A rolling retention policy keeps logs for a set period before replacing them with newer ones, which keeps disk usage under control. I could set up monitoring tools to parse the stored logs automatically, but I have not explored that yet. For now, I manually review the logs for signs of unusual or malicious activity.

- Web Proxy: A VM running NGINX acts as a reverse proxy to direct incoming web traffic. All traffic on ports 80 or 443 is forwarded to this VM. It first redirects any HTTP traffic to HTTPS, then it examines the URL to determine which web host is the intended destination.

- Web Hosts: As a proof of concept, I am running two distinct web hosts that are managed by the proxy. The main site, which you are currently visiting, is hosted on an Apache web server. The other service, which is only accessible through a direct link, is an open source JavaScript application I found on GitHub that runs on its own VM.

- Access Point (SSH): This could also fall under the protocols section, but I list it here because a dedicated VM serves as my SSH access point. I realize that exposing port 22 to the internet is a dangerous proposition. To protect my network, all SSH traffic is forwarded straight to this dedicated VM, which only accepts SSH connections from devices holding a pre-shared key. On every device that holds a key, a password is still required to use it. Because connections without a valid key are rejected before any password is tried, brute-force password attacks are stopped immediately. The VM also monitors its interface for failed connections with Fail2Ban and blacklists any addresses that fail authentication. Once an approved device has connected to the access point, regular password-based SSH connections can be made to the other VMs. This configuration gives me secure remote access to my servers from outside my home network.
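The two decisions the NGINX reverse proxy makes (redirect HTTP to HTTPS, then pick a web host from the URL) can be sketched in a few lines of Python. This is an illustration, not the real NGINX configuration: it assumes routing by path prefix (routing by hostname would work just as well), and the `UPSTREAMS` prefixes and internal addresses are hypothetical.

```python
from urllib.parse import urlsplit

# Hypothetical upstreams keyed by URL path prefix. The prefixes and internal
# addresses are illustrative assumptions, not the real NGINX configuration.
UPSTREAMS = {
    "/": "http://10.0.0.20",      # main Apache site (assumed address)
    "/app/": "http://10.0.0.21",  # JavaScript app VM (assumed path and address)
}


def handle(url: str) -> tuple[str, str]:
    """Return ("redirect", https_url) or ("proxy", upstream_url)."""
    parts = urlsplit(url)
    if parts.scheme == "http":
        # Step 1: any plain-HTTP request is redirected to the same URL over HTTPS.
        return ("redirect", parts._replace(scheme="https").geturl())
    # Step 2: choose the upstream whose prefix is the longest match for the path,
    # roughly how NGINX selects among prefix `location` blocks.
    path = parts.path or "/"
    prefix = max((p for p in UPSTREAMS if path.startswith(p)), key=len)
    target = UPSTREAMS[prefix] + path
    if parts.query:
        target += "?" + parts.query
    return ("proxy", target)


print(handle("http://msdumford.tplinkdns.com/about"))
print(handle("https://msdumford.tplinkdns.com/app/index.html"))
```

Because every path starts with `/`, the catch-all `/` entry guarantees a match, while more specific prefixes such as `/app/` take priority when they apply.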

Other Protocols and Services

- Dynamic DNS: You may have noticed that this site has a domain name attached to it. By taking advantage of a service offered by my router's manufacturer, I have access to a free domain of my choosing (as long as it ends with .tplinkdns.com) and a free dynamic DNS service. Whenever my ISP changes my home IP address, the router will automatically propagate the new DNS information, creating a seamless user experience without having to pay for a static public IP address.

- Firewall: My network is protected by two distinct firewalls. My physical router includes a basic firewall that blocks any inbound connection attempts that are not whitelisted; currently, the only whitelisted services are HTTP(S), SSH, and the Minecraft server ports. This firewall allows most outbound traffic without restriction to avoid interfering with phones, laptops, and other devices on my home network. The virtual firewall run by my pfSense VM is much more granular. It has similar inbound rules but blocks most outbound communication: the virtual servers may reach a few internet services, such as NTP and ICMP, but very little beyond that. On both firewalls I use aliases and port groups in the rules, so changing an IP address only requires updating the associated alias, and every rule that references it adjusts automatically.

- HTTPS: My website is secured with an SSL/TLS certificate from Let's Encrypt, a free certificate authority that issues domain-validated certificates. These certificates are trusted by all major browsers and prove to the client that it really connected to the server hosting msdumford.tplinkdns.com. The NGINX reverse proxy redirects all HTTP connections to HTTPS, so no unencrypted connections are allowed. A script on the web server runs periodically to renew the certificate before it expires.

- IP Addressing: Within both my home network and my virtual server network, I have configured the routers to use specific private IP subnets. My regular home network uses a class C address block. A few devices on it have static IP addresses, and a large block is reserved for DHCP, which is especially important for guest devices that connect to my home Wi-Fi. The virtual server network uses a class A address block, and every server is assigned a static address, which makes writing firewall and port forwarding rules much easier.

- NAT and Port Forwarding: Two address translations and a port forward are required to get external connections to the correct virtual servers. First, my physical router directs all inbound connections to the WAN side of the virtual router on the same ports. Then the virtual router sends traffic to specific server IPs based on the destination port. Finally, services running in Docker map the non-standard port I expose on the router to the default port of the actual service running in the container. Together, these steps tunnel external connections into my virtual environment without letting them explore my home network.

- Recursive DNS: In late 2023, I added a Pi-hole DNS sinkhole and an Unbound recursive DNS server to my environment. My physical router now sends all DNS requests to the Pi-hole, which filters queries against blacklists. Blacklisted domains are answered as unreachable, so sites behave as if the service they tried to reach is unavailable. This lets me block a number of unnecessary web trackers. Pi-hole forwards queries for approved domains to Unbound, which resolves them recursively, starting from the root servers, instead of relying on public DNS resolvers run by companies like Google. Together, Pi-hole and Unbound give me additional privacy and control over my network.

- VPN: My physical router has a built-in VPN server that supports multiple VPN types, including the free OpenVPN. By downloading a generated key from the router and importing that file into the OpenVPN client on another computer, I gain a couple of useful abilities. One is the intended benefit of a VPN: I can use my home network as an extra hop in any web connection, hiding the sites I visit from whatever local network I am on and ensuring that even my HTTP traffic is encrypted between my device and my home. A secondary benefit is that through the VPN I have access to my home network as if I were located there. I can use this to remotely control my home desktop or interact with my locally hosted web interfaces for Proxmox and Portainer from anywhere with an internet connection. In late 2023, I upgraded to a standalone OpenVPN server. VPN traffic is now tunneled through my router to this server, giving me more control over the configuration and forcing VPN clients to also use my Pi-hole and Unbound DNS services.
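The NAT and port forwarding chain described above can be traced as three table lookups: the physical router forwards whitelisted ports to the pfSense WAN side, pfSense picks a server by destination port, and Docker maps the exposed port to the container's default port. The Python sketch below models that path. Every address, the `trace` helper, and the non-standard port 25566 are hypothetical; only 25565 (Minecraft's default server port) and the standard SSH/HTTP/HTTPS ports are real conventions.

```python
from typing import List, Optional

# Every address and port below is an illustrative assumption, not the real setup.
PFSENSE_WAN = "192.168.1.2"

# Stage 1: ports the physical router forwards (unchanged) to the pfSense WAN side.
ROUTER_FORWARDED_PORTS = {22, 80, 443, 25566}

# Stage 2: pfSense chooses an internal server by destination port.
PFSENSE_PORT_MAP = {
    22: "10.0.0.30",     # SSH access point VM
    80: "10.0.0.10",     # NGINX reverse proxy VM
    443: "10.0.0.10",
    25566: "10.0.0.40",  # Docker host for Minecraft
}

# Stage 3: Docker maps the exposed non-standard port to Minecraft's default 25565.
DOCKER_PORT_MAP = {25566: 25565}


def trace(port: int) -> Optional[List[str]]:
    """List each hop an inbound connection takes, or None if it is dropped."""
    if port not in ROUTER_FORWARDED_PORTS:
        return None  # not whitelisted: the physical router's firewall drops it
    server = PFSENSE_PORT_MAP[port]
    hops = [
        f"physical router -> {PFSENSE_WAN}:{port}",
        f"pfSense -> {server}:{port}",
    ]
    if port in DOCKER_PORT_MAP:
        hops.append(f"Docker -> container:{DOCKER_PORT_MAP[port]}")
    return hops


for hop in trace(25566):
    print(hop)
```

Keeping each stage as its own table mirrors why aliases help on the real firewalls: changing a server's address touches one entry, not every rule.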

Management Concepts

- Risk Management: There are always more steps I could take to make my network more secure, more redundant, and more stable. I recognize that running a home server network, on second-hand hardware, with services exposed to the internet, as a lone individual, makes my vulnerability rather high. On the other hand, I believe the threat of compromise and the cost of a breach are both extremely low. My servers do not process credit card data, social security numbers, or any other sensitive information. And while losing all the work I have put into this project would be upsetting, there would not be any financial cost. For these reasons, I have admittedly made some compromises. Every organization goes through a similar risk evaluation process. Having done these calculations for myself, I am satisfied with the level of network security and monitoring that I have put in place for now.

- Backups: Having worked in an enterprise datacenter environment, I know that the ideal situation is to have at least three copies of any data, located in two or more physically distinct sites. However, as discussed above, the resources required to make that happen in my environment are not worth the low return in value. I do keep redundant copies of all personal files I deem important, which includes the files for this website, but that does not extend to my entire server infrastructure. At least one of those copies is an offline storage device that I save backups to periodically.

- Redundancy: This is another concept that is vital to any large datacenter operating under strict SLAs that guarantee 99.999% or greater availability. I have worked in such a datacenter, which had two of everything: two internet connections from different service providers, two power feeds from different substations, two building-scale UPS configurations, all the way down to the individual equipment level. My home network has none of that. I recognize the exposure created by the many single points of failure in my network, but primarily due to cost, I am not able to mitigate them. My server environment offers no SLAs, and I am comfortable with that for now.

- Future Plans: While I do consider my network, as it stands, to be a finished project, there are many things that I would like to add or change as time and budget permit. One idea would require two NAS devices. One would go on my network and the other would go in the home of a friend or relative. Each would serve as a local backup location for every device on its network. Then, I would configure them to communicate with each other, creating encrypted remote backups at the other site. Other potential projects could include a media server, other multiplayer game servers, or an email server. I can always do more to secure and monitor my network as well. The possibilities are endless!
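To put the 99.999% availability target mentioned under Redundancy in perspective, the short Python sketch below converts an availability percentage into a yearly downtime budget. It assumes a 365.25-day year; `downtime_budget_minutes` is a hypothetical helper name. Five nines works out to roughly 5.26 minutes of downtime per year, which is why such datacenters duplicate everything.

```python
MINUTES_PER_YEAR = 365.25 * 24 * 60  # 525,960 minutes, averaging in leap years


def downtime_budget_minutes(availability_percent: float) -> float:
    """Maximum downtime per year that still meets an availability target."""
    return (1 - availability_percent / 100) * MINUTES_PER_YEAR


for target in (99.9, 99.99, 99.999):
    print(f"{target}% -> {downtime_budget_minutes(target):.1f} minutes per year")
```

Each additional nine cuts the allowed downtime by a factor of ten, from about 8.8 hours per year at 99.9% to just over 5 minutes at 99.999%.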